Change effect

Another feature we can add to our application is the ability to change the background of the local video. This helps protect the user's privacy: the video is processed locally, and the background is blurred or replaced by a static image before the video is sent to the conference.

The feature detects the user's figure and separates it from the background (segmentation). Then it applies a filter to the background only, which produces the selected effect.

In this lesson we will apply two different effects:

Blur: The background will be blurred.

Background replacement: The background will be replaced by a static image.

You can download the starter code from Step.05-Exercise-Change-effect.

Effect enumeration

We will start by defining a new enumeration that will contain all possible states for the effect. Add a new file src/types/Effect.ts with the following content:

export enum Effect {
  None = 'none',
  Blur = 'blur',
  Overlay = 'overlay',
}
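Values that come back from storage or from other code are plain strings, so it can help to check them against the enum before trusting them. The following is a minimal sketch; `isEffect` is a hypothetical helper that is not part of the starter code, and the enum is redeclared so the snippet runs on its own:

```typescript
// Redeclared here so the snippet is self-contained.
enum Effect {
  None = 'none',
  Blur = 'blur',
  Overlay = 'overlay',
}

// Hypothetical helper (not part of the starter code): returns true only if
// `value` is one of the string values of the Effect enum.
const isEffect = (value: unknown): value is Effect =>
  typeof value === 'string' &&
  (Object.values(Effect) as string[]).includes(value);

console.log(isEffect('blur')); // true
console.log(isEffect('sepia')); // false
```

Because `Effect` is a string enum, `Object.values(Effect)` returns only the values (`'none'`, `'blur'`, `'overlay'`), with no reverse mapping to filter out.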

Settings interface

Now we will add a new parameter to the settings. Open the src/types/Settings.ts file and add the new property to store the effect:

import {type MediaDeviceInfoLike} from '@pexip/media-control';
import {type Effect} from './Effect';

export interface Settings {
  audioInput: MediaDeviceInfoLike | undefined;
  audioOutput: MediaDeviceInfoLike | undefined;
  videoInput: MediaDeviceInfoLike | undefined;
  effect: Effect;
}

LocalStorageKey enum

Open the src/types/LocalStorageKey.ts file and add a new member to the enum to store the effect in the local storage:

Effect = `${prefix}effect`,

Get Video Processor

We will define a new file src/utils/video-processor.ts that will export a function to get a video processor with the selected effect.

We will start by importing the necessary functions and types:

import {
  type ProcessVideoTrack,
  type VideoProcessor,
  createCanvasTransform,
  createSegmenter,
  createVideoProcessor,
  createVideoTrackProcessor,
  createVideoTrackProcessorWithFallback,
} from '@pexip/media-processor';
import {type Effect} from '../types/Effect';

Define the NavigatorUABrandVersion interface to store the brand and version of the browser:

interface NavigatorUABrandVersion {
  brand: string;
  version: string;
}

Define the NavigatorUAData interface to store the brands, mobile flag and platform of the browser:

interface NavigatorUAData {
  brands: NavigatorUABrandVersion[];
  mobile: boolean;
  platform: string;
}

Define a new function isChromium that will return true if the browser is based on Chromium:

const isChromium = (): boolean => {
  if ('userAgentData' in navigator) {
    const {brands} = navigator.userAgentData as NavigatorUAData;
    return Boolean(brands.find(({brand}) => brand === 'Chromium'));
  }
  return false;
};
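The detection above reads `navigator` directly, which makes it hard to try outside a browser. Below is a minimal sketch of the same logic that takes the navigator-like object as a parameter. The names `chromiumFrom`, `pickDelegate`, `BrandVersion` and `UAData` are hypothetical; the interfaces mirror the ones defined above. Since `navigator.userAgentData` is currently implemented only by Chromium-based browsers, every other browser falls back to `false`, that is, to the CPU delegate:

```typescript
// Local copies of the interfaces above (renamed to avoid clashing with any
// global DOM typings).
interface BrandVersion {
  brand: string;
  version: string;
}

interface UAData {
  brands: BrandVersion[];
  mobile: boolean;
  platform: string;
}

// Hypothetical, testable variant of isChromium(): the navigator-like object
// is injected instead of being read from the global scope.
const chromiumFrom = (nav: object): boolean => {
  if ('userAgentData' in nav) {
    const {brands} = (nav as {userAgentData: UAData}).userAgentData;
    return brands.some(({brand}) => brand === 'Chromium');
  }
  return false;
};

// Mirrors the ternary used in getVideoProcessor().
const pickDelegate = (nav: object): 'GPU' | 'CPU' =>
  chromiumFrom(nav) ? 'GPU' : 'CPU';

const chromeLike = {
  userAgentData: {
    brands: [
      {brand: 'Chromium', version: '120'},
      {brand: 'Google Chrome', version: '120'},
    ],
    mobile: false,
    platform: 'macOS',
  },
};

console.log(pickDelegate(chromeLike)); // GPU
console.log(pickDelegate({})); // CPU
```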

Define a new exported function getVideoProcessor that will return a VideoProcessor with the effect. We will apply this processor later to the local video stream:

export const getVideoProcessor = async (
  effect: Effect,
): Promise<VideoProcessor> => {
  // Use the GPU delegate on Chromium-based browsers and the CPU delegate otherwise
  const delegate = isChromium() ? 'GPU' : 'CPU';

  // Path to the `@mediapipe/tasks-vision` assets. It is passed directly to
  // [FilesetResolver.forVisionTasks()](https://ai.google.dev/edge/api/mediapipe/js/tasks-vision.filesetresolver#filesetresolverforvisiontasks)
  const tasksVisionBasePath = '../wasm';

  const segmenter = createSegmenter(tasksVisionBasePath, {
    modelAsset: {
      path: '../models/selfie_segmenter.tflite',
      modelName: 'selfie',
    },
    delegate,
  });

  const transformer = createCanvasTransform(segmenter, {
    effects: effect,
    backgroundImageUrl: '../backgrounds/background.png',
  });

  const getTrackProcessor = (): ProcessVideoTrack => {
    // Feature detection: check whether the browser supports the `MediaStreamTrackProcessor` API
    if ('MediaStreamTrackProcessor' in window) {
      return createVideoTrackProcessor(); // Using the latest Streams API
    }
    return createVideoTrackProcessorWithFallback(); // Using the fallback implementation
  };

  const processor = createVideoProcessor([transformer], getTrackProcessor());

  // Start the processor
  await processor.open();

  return processor;
};
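`getTrackProcessor()` is a plain feature-detection switch: it uses the Streams-based implementation when `MediaStreamTrackProcessor` exists and the fallback otherwise. A minimal sketch of that decision, with the global object injected so it can run outside a browser (`chooseTrackProcessor` and the returned labels are hypothetical):

```typescript
type TrackProcessorKind = 'streams-api' | 'fallback';

// Hypothetical helper: the same `in` check as getTrackProcessor(), but made
// against an injected global object instead of `window`.
const chooseTrackProcessor = (globalObj: object): TrackProcessorKind =>
  'MediaStreamTrackProcessor' in globalObj ? 'streams-api' : 'fallback';

console.log(chooseTrackProcessor({})); // fallback
console.log(chooseTrackProcessor({MediaStreamTrackProcessor: class {}})); // streams-api
```

Checking for the constructor itself, rather than parsing the user agent, keeps the code working as more browsers add the API.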

App component

We will open the src/App.tsx file and implement the logic to apply the effect to the local video stream and send it to the conference.

We will start by importing the necessary functions and types:

import {Effect} from './types/Effect';
import {type VideoProcessor} from '@pexip/media-processor';
import {getVideoProcessor} from './utils/video-processor';

Define a new variable to store the video processor. Declare it at module level (outside the component) so that it keeps its value between renders. We will replace it each time the effect changes:

let videoProcessor: VideoProcessor | undefined;

Define two states to manage the effect and the processed stream:

const [processedStream, setProcessedStream] = useState<MediaStream>();
const [effect, setEffect] = useState<Effect>(
  (localStorage.getItem(LocalStorageKey.Effect) as Effect | null) ?? Effect.None,
);
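The type assertion on the stored value only silences the compiler: if the stored string is not one of the enum values (for example, a value left over from an older version of the app), it is passed straight through to the processor. A minimal sketch of a validating loader, assuming a storage object with the same `getItem`/`setItem` shape as `localStorage`. The names `loadEffect`, `saveEffect`, `memoryStorage` and the key `'example:effect'` are hypothetical stand-ins (the real key is `LocalStorageKey.Effect`):

```typescript
enum Effect {
  None = 'none',
  Blur = 'blur',
  Overlay = 'overlay',
}

// The two Web Storage methods the loader needs.
interface StorageLike {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const EFFECT_KEY = 'example:effect'; // hypothetical, stands in for LocalStorageKey.Effect

const isEffect = (value: unknown): value is Effect =>
  typeof value === 'string' &&
  (Object.values(Effect) as string[]).includes(value);

// Returns the stored effect, or Effect.None if nothing valid is stored.
const loadEffect = (storage: StorageLike): Effect => {
  const stored = storage.getItem(EFFECT_KEY);
  return isEffect(stored) ? stored : Effect.None;
};

const saveEffect = (storage: StorageLike, effect: Effect): void => {
  storage.setItem(EFFECT_KEY, effect);
};

// In-memory stand-in for localStorage, for trying this outside a browser.
const memoryStorage = (): StorageLike => {
  const data = new Map<string, string>();
  return {
    getItem: key => data.get(key) ?? null,
    setItem: (key, value) => {
      data.set(key, value);
    },
  };
};

const storage = memoryStorage();
console.log(loadEffect(storage)); // none
saveEffect(storage, Effect.Blur);
console.log(loadEffect(storage)); // blur
storage.setItem(EFFECT_KEY, 'sepia');
console.log(loadEffect(storage)); // none
```

In the component, a lazy initializer such as `useState<Effect>(() => loadEffect(localStorage))` could replace the type assertion, and React would only read the storage on the first render.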

The goal of each of these states is:

processedStream: We will use this state to store the processed stream with the effect applied.

effect: We will use this state to store the current effect.

Now we will make some modifications to the handleStartConference function to apply the effect to the local video stream before joining the conference:

- Get the processed stream: we will use the getProcessedStream function (defined later) to apply the effect to the local video stream.
- Use processedStream instead of localVideoStream: when we call infinityClient.call, we will send the tracks from processedStream instead of those from localVideoStream.
- In case of an error, set processedStream to undefined.

const handleStartConference = async (
  nodeDomain: string,
  conferenceAlias: string,
  displayName: string
): Promise<void> => {
  ...
  const processedStream = await getProcessedStream(localVideoStream, effect)
  setProcessedStream(processedStream)

  const response = await infinityClient.call({
    callType: ClientCallType.AudioVideo,
    node: nodeDomain,
    conferenceAlias,
    displayName,
    bandwidth: 0,
    mediaStream: new MediaStream([
      ...localAudioStream.getTracks(),
      ...processedStream.getTracks()
    ])
  })

  if (response != null) {
    if (response.status !== 200) {
      localAudioStream.getTracks().forEach((track) => {
        track.stop()
      })
      localVideoStream.getTracks().forEach((track) => {
        track.stop()
      })
      setLocalAudioStream(undefined)
      setLocalVideoStream(undefined)
      setProcessedStream(undefined)
    }
    ...
  }
}
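In the error branch above, the tracks of each stream are stopped one stream at a time. A small helper that stops every track of any number of optional streams can keep this kind of cleanup in one place, for example `stopStreams(localAudioStream, localVideoStream)`. A minimal sketch; `stopStreams`, `TrackLike` and `StreamLike` are hypothetical names, and the structural types accept real `MediaStream` objects as well as the fakes used here:

```typescript
// Structural subset of MediaStream/MediaStreamTrack used by the helper, so it
// works with real streams in the browser and with fakes in tests.
interface TrackLike {
  stop(): void;
}

interface StreamLike {
  getTracks(): TrackLike[];
}

// Hypothetical helper: stops every track of every stream that is defined.
const stopStreams = (...streams: Array<StreamLike | null | undefined>): void => {
  for (const stream of streams) {
    stream?.getTracks().forEach(track => {
      track.stop();
    });
  }
};

// Usage with fake tracks that record when they are stopped.
const stopped: string[] = [];
const fakeStream = (...ids: string[]): StreamLike => ({
  getTracks: () =>
    ids.map(id => ({
      stop: () => {
        stopped.push(id);
      },
    })),
});

stopStreams(fakeStream('audio'), undefined, fakeStream('video', 'processed'));
console.log(stopped); // [ 'audio', 'video', 'processed' ]
```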

We will modify the handleVideoMute function to stop the tracks and set processedStream to undefined when the video is muted. When the video is unmuted, we will set processedStream to a new processed stream and send the audio and processed streams to Pexip Infinity:

const handleVideoMute = async (mute: boolean): Promise<void> => {
  if (mute) {
    localVideoStream?.getTracks().forEach(track => {
      track.stop();
    });
    setLocalVideoStream(undefined);
    setProcessedStream(undefined);
  } else {
    const localVideoStream = await navigator.mediaDevices.getUserMedia({
      video: {deviceId: videoInput?.deviceId},
    });
    setLocalVideoStream(localVideoStream);
    const processedStream = await getProcessedStream(localVideoStream, effect);
    setProcessedStream(processedStream);
    infinityClient.setStream(
      new MediaStream([
        ...(localAudioStream?.getTracks() ?? []),
        ...processedStream.getTracks(),
      ]),
    );
  }
  await infinityClient.muteVideo({muteVideo: mute});
};

We will modify the handleSettingsChange function to manage effect changes:

- Define the new variable newProcessedStream to store the new processed stream.
- Get a new processed stream if the video input has changed.
- Get a new processed stream if the effect has changed but the video input has not.
- Send the new audio and processed streams to Pexip Infinity.

Here are the modifications to the handleSettingsChange function:

const handleSettingsChange = async (settings: Settings): Promise<void> => {
  let newAudioStream: MediaStream | null = null
  let newVideoStream: MediaStream | null = null
  let newProcessedStream: MediaStream | null = null

  ...

  // Get the new video stream if the video input has changed
  if (settings.videoInput !== videoInput) {
    ...
    // Apply the effect to the stream from the new camera (newVideoStream),
    // not to the previous localVideoStream
    newProcessedStream = await getProcessedStream(
      newVideoStream,
      settings.effect
    )
    setProcessedStream(newProcessedStream)
  }

  // Set the new effect if it has changed
  if (settings.effect !== effect) {
    setEffect(settings.effect)
    localStorage.setItem(LocalStorageKey.Effect, settings.effect)

    // Get a new processed stream if the effect has changed
    // but the video input has not
    if (settings.videoInput === videoInput && localVideoStream != null) {
      newProcessedStream = await getProcessedStream(
        localVideoStream,
        settings.effect
      )
      setProcessedStream(newProcessedStream)
    }
  }

  // Send the new audio and processed stream to Pexip Infinity
  if (newAudioStream != null || newProcessedStream != null) {
    infinityClient.setStream(
      new MediaStream([
        ...(newAudioStream?.getTracks() ??
          localAudioStream?.getTracks() ??
          []),
        ...(newProcessedStream?.getTracks() ??
          processedStream?.getTracks() ??
          [])
      ])
    )
  }

  ...
}
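The two branches above decide which stream gets processed: a camera change always produces a new processed stream with the newly selected effect, while an effect change on its own reprocesses the current camera stream, if there is one. A minimal sketch of that decision as a pure function, which makes the branching easy to check in isolation. The `ReprocessPlan`, `DeviceSelection` and `planReprocess` names are hypothetical; devices are compared by reference with `!==`, as in the code above:

```typescript
enum Effect {
  None = 'none',
  Blur = 'blur',
  Overlay = 'overlay',
}

// What handleSettingsChange should do with the processed stream.
type ReprocessPlan =
  | {kind: 'new-camera'; effect: Effect}
  | {kind: 'same-camera'; effect: Effect}
  | {kind: 'nothing'};

interface DeviceSelection<Device> {
  videoInput: Device | undefined;
  effect: Effect;
}

// Mirrors the branches of handleSettingsChange.
const planReprocess = <Device>(
  current: DeviceSelection<Device>,
  next: DeviceSelection<Device>,
  hasLocalVideo: boolean,
): ReprocessPlan => {
  if (next.videoInput !== current.videoInput) {
    // New camera: process the new stream with the selected effect.
    return {kind: 'new-camera', effect: next.effect};
  }
  if (next.effect !== current.effect && hasLocalVideo) {
    // Same camera, new effect: reprocess the existing local stream.
    return {kind: 'same-camera', effect: next.effect};
  }
  return {kind: 'nothing'};
};

const camera = {deviceId: 'camera-1'};
const otherCamera = {deviceId: 'camera-2'};

console.log(
  planReprocess(
    {videoInput: camera, effect: Effect.None},
    {videoInput: camera, effect: Effect.Blur},
    true,
  ).kind,
); // same-camera
```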

We will modify the refreshDevices function to also return the effect:

const refreshDevices = async (): Promise<Settings> => {
  ...
  return {
    audioInput,
    audioOutput,
    videoInput,
    effect
  }
}

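Throughout this lesson we have called the getProcessedStream function several times. Now it is time to define it. It closes and destroys the previous video processor (if any), creates a new one with the requested effect and uses it to process the local video stream: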

const getProcessedStream = async (
  localVideoStream: MediaStream,
  effect: Effect,
): Promise<MediaStream> => {
  if (videoProcessor != null) {
    videoProcessor.close();
    videoProcessor.destroy().catch(console.error);
  }
  videoProcessor = await getVideoProcessor(effect);
  const processedStream = await videoProcessor.process(localVideoStream);
  return processedStream;
};
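getProcessedStream follows a "tear down the old processor, then build a new one" pattern. Because getVideoProcessor is asynchronous, two calls that overlap (for example, quick clicks on different effects) can both see the same old processor in the shared videoProcessor variable, and the processor created by the first call is then overwritten without ever being closed. A minimal sketch of one way to avoid that: chain the swaps on a promise so they run one after another. The `Closable` interface, `createSerialSwapper` and the fake processors are hypothetical stand-ins for `VideoProcessor`, not part of `@pexip/media-processor`:

```typescript
// Minimal stand-in for the two VideoProcessor methods used by
// getProcessedStream (hypothetical interface, for illustration only).
interface Closable {
  close(): void;
  destroy(): Promise<void>;
}

// Hypothetical helper: each call waits for the previous swap to finish, then
// tears down the current processor and creates a new one, so overlapping
// calls never share or leak a processor.
const createSerialSwapper = <E, P extends Closable>(
  create: (effect: E) => Promise<P>,
): ((effect: E) => Promise<P>) => {
  let current: P | undefined;
  let queue: Promise<unknown> = Promise.resolve();

  return (effect: E): Promise<P> => {
    const swap = async (): Promise<P> => {
      if (current != null) {
        current.close();
        await current.destroy();
        current = undefined;
      }
      current = await create(effect);
      return current;
    };
    const result = queue.then(swap);
    // Keep the queue going even if one swap fails.
    queue = result.catch(() => undefined);
    return result;
  };
};

// Usage with fake processors that record when they are closed.
let created = 0;
const closedIds: number[] = [];
const fakeCreate = async (effect: string) => {
  const id = ++created;
  await new Promise<void>(resolve => setTimeout(resolve, 5));
  return {
    id,
    effect,
    close: () => {
      closedIds.push(id);
    },
    destroy: async () => {},
  };
};

const swapProcessor = createSerialSwapper(fakeCreate);

// Three quick "clicks": processors 1 and 2 are closed, processor 3 stays active.
const swapDemo = Promise.all([
  swapProcessor('blur'),
  swapProcessor('overlay'),
  swapProcessor('none'),
]);
```

In the app, `create` would be getVideoProcessor. Unlike the code above, this sketch awaits `destroy()` before creating the next processor, trading a little latency for a strict one-processor-at-a-time guarantee.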

Finally, we will modify the props passed to the Conference component: pass processedStream (instead of localVideoStream) to the localVideoStream prop, and add the effect to the settings:

<Conference
  localVideoStream={processedStream}
  remoteStream={remoteStream}
  devices={devices}
  settings={{
    audioInput,
    audioOutput,
    videoInput,
    effect
  }}
  onAudioMute={handleAudioMute}
  onVideoMute={handleVideoMute}
  onSettingsChange={handleSettingsChange}
  onDisconnect={handleDisconnect}
/>

SettingsModal component

We will open the src/components/Conference/SettingsModal/SettingsModal.tsx file and add buttons to change the effect of the local video.

We will start by adding Box, Icon and IconTypes to the import from the @pexip/components package:

import {Box, Button, Icon, IconTypes, Modal, Video} from '@pexip/components';

Then we will add the rest of the imports:

import {Effect} from '../../../types/Effect';
import {type VideoProcessor} from '@pexip/media-processor';
import {getVideoProcessor} from '../../../utils/video-processor';
import {Loading} from '../../Loading/Loading';

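We will define a new variable to store the video processor. As in the App component, declare it at module level (outside the component) so that it keeps its value between renders. We will replace it each time the effect changes:

let videoProcessor: VideoProcessor | undefined;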

We will define the following states to manage the processed stream, the loading state of the video and the selected effect:

const [processedStream, setProcessedStream] = useState<MediaStream>();
const [loadedVideo, setLoadedVideo] = useState(false);
const [effect, setEffect] = useState<Effect>(Effect.None);

The goal of each of these states is:

processedStream: This state will store the processed stream with the effect applied.

loadedVideo: We will use this state to show a loading spinner while the video is being processed.

effect: This state will store the chosen effect.

We will define a new function handleEffectChange that applies the effect to the local video stream. This function will be called each time the user clicks one of the effect buttons:

const handleEffectChange = (effect: Effect): void => {
  setEffect(effect);
  if (localStream != null) {
    setLoadedVideo(false);
    getProcessedStream(localStream, effect)
      .then(stream => {
        setProcessedStream(stream);
      })
      .catch(console.error);
  }
};
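Each click in handleEffectChange starts an asynchronous getProcessedStream call, and nothing guarantees that these calls resolve in the order they were started. If an earlier call finishes last, its stream overwrites the one for the effect the user picked most recently. A minimal sketch of a "latest request wins" guard; `createLatestGuard`, `applyEffect` and `delay` are hypothetical names, with `delay` standing in for getProcessedStream and `shown.push` standing in for setProcessedStream:

```typescript
// Hypothetical helper: hands out increasing request ids and reports whether a
// given id still belongs to the most recent request.
const createLatestGuard = () => {
  let latest = 0;
  return {
    begin: (): number => ++latest,
    isLatest: (id: number): boolean => id === latest,
  };
};

const delay = (ms: number): Promise<void> =>
  new Promise<void>(resolve => setTimeout(resolve, ms));

const guard = createLatestGuard();
const shown: string[] = [];

// Stands in for handleEffectChange.
const applyEffect = async (effect: string, ms: number): Promise<void> => {
  const id = guard.begin();
  await delay(ms);
  // Only the most recent request is allowed to update the preview.
  if (guard.isLatest(id)) {
    shown.push(effect);
  }
};

// 'blur' is requested first but finishes last, so its result is ignored.
const raceDemo = Promise.all([applyEffect('blur', 20), applyEffect('overlay', 5)]).then(() => {
  console.log(shown); // [ 'overlay' ]
});
```

In the component, the guard would need to survive re-renders, for example by creating it once with `useRef` or at module level like videoProcessor.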

We will modify the handleSave function to send the new effect to the parent component:

const handleSave = (): void => {
  props
    .onSettingsChange({
      audioInput,
      audioOutput,
      videoInput,
      effect,
    })
    .catch(console.error);
  props.onClose();
};

In a similar way to the App component, we will define a new function getProcessedStream that applies the effect to the local video stream:

const getProcessedStream = async (
  localVideoStream: MediaStream,
  effect: Effect,
): Promise<MediaStream> => {
  if (videoProcessor != null) {
    videoProcessor.close();
    videoProcessor.destroy().catch(console.error);
  }
  videoProcessor = await getVideoProcessor(effect);
  const processedStream = await videoProcessor.process(localVideoStream);
  return processedStream;
};

We will modify the useEffect hook to apply the effect to the local video each time the SettingsModal is opened:

useEffect(() => {
  const bootstrap = async (): Promise<void> => {
    if (props.isOpen) {
      ...
      setEffect(props.settings.effect)
      const processedStream = await getProcessedStream(
        localStream,
        props.settings.effect
      )
      setProcessedStream(processedStream)
    } else {
      ...
    }
  }
  bootstrap().catch(console.error)
}, [props.isOpen])

Now we will move to the rendering part of the component and define what to show when the modal is open.

To improve the user experience, we will show a loading spinner while the video is being processed:

{!loadedVideo && (
  <Box className="LoadingVideoPlaceholder">
    <Loading />
  </Box>
)}

Once the video is loaded (onLoadedData event), we will show it by changing its display style property from none to block:

{processedStream != null && (
  <Video
    srcObject={processedStream}
    isMirrored={true}
    onLoadedData={() => {
      setLoadedVideo(true)
    }}
    style={{ display: loadedVideo ? 'block' : 'none' }}
  />
)}

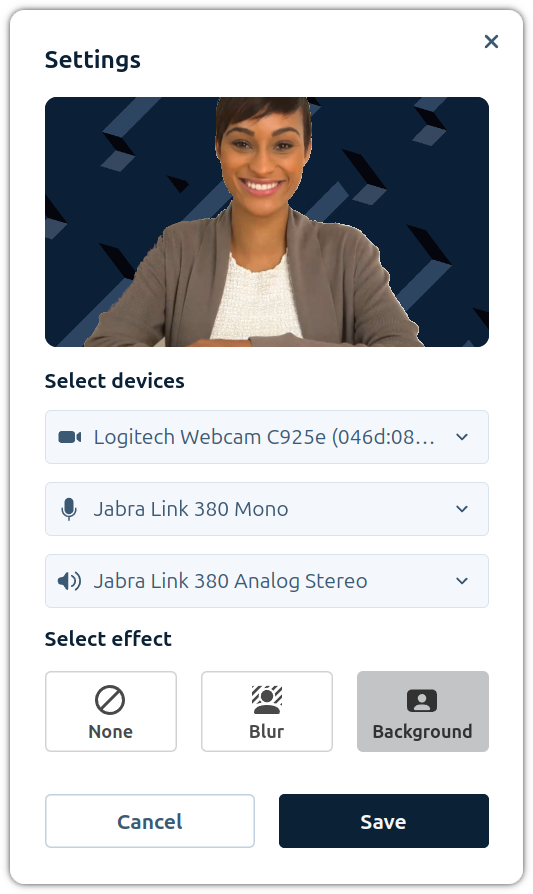

Now we will define three buttons to change the effect of the local video:

<div className="EffectSelectionContainer">
  <h4>Select effect</h4>
  <div className="ButtonSet">
    <Button
      variant="bordered"
      size="large"
      isActive={effect === Effect.None}
      onClick={() => {
        handleEffectChange(Effect.None)
      }}
    >
      <div className="ButtonInner">
        <Icon source={IconTypes.IconBlock} />
        <p>None</p>
      </div>
    </Button>
    <Button
      variant="bordered"
      size="large"
      isActive={effect === Effect.Blur}
      onClick={() => {
        handleEffectChange(Effect.Blur)
      }}
    >
      <div className="ButtonInner">
        <Icon source={IconTypes.IconBackgroundBlur} />
        <p>Blur</p>
      </div>
    </Button>
    <Button
      variant="bordered"
      size="large"
      isActive={effect === Effect.Overlay}
      onClick={() => {
        handleEffectChange(Effect.Overlay)
      }}
    >
      <div className="ButtonInner">
        <Icon source={IconTypes.IconMeetingRoom} />
        <p>Background</p>
      </div>
    </Button>
  </div>
</div>
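The three buttons differ only in the effect, the icon and the label, so they could also be generated from a list. A minimal sketch of such a list as plain data; the `EffectOption` type and `EFFECT_OPTIONS` name are hypothetical, and the icon names are kept as strings here so the snippet runs without `@pexip/components` (in the component you would keep the `IconTypes` members used above):

```typescript
enum Effect {
  None = 'none',
  Blur = 'blur',
  Overlay = 'overlay',
}

interface EffectOption {
  effect: Effect;
  icon: string; // name of the IconTypes member shown on the button
  label: string;
}

const EFFECT_OPTIONS: readonly EffectOption[] = [
  {effect: Effect.None, icon: 'IconBlock', label: 'None'},
  {effect: Effect.Blur, icon: 'IconBackgroundBlur', label: 'Blur'},
  {effect: Effect.Overlay, icon: 'IconMeetingRoom', label: 'Background'},
];

// Every enum value should have a button; this lists the ones that do not.
const missing = Object.values(Effect).filter(
  e => !EFFECT_OPTIONS.some(option => option.effect === e),
);

console.log(missing); // []
```

The JSX would then map over `EFFECT_OPTIONS` and render one `Button` per entry, using `option.effect` as the `key`, in `isActive` and in the `onClick` handler. Adding a new effect to the enum then only requires adding one entry to the list.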

Run the app

When you run the app and open the settings dialog, you will see three new buttons to select the effect: None, Blur and Background. If you click one of them, the preview video in the dialog will be updated with the selected effect applied.

Once the changes are saved, the selected effect will be applied to the video sent to the conference, and it will be stored in the local storage so it is restored the next time the app is loaded.

You can compare your code with the solution in Step.05-Solution-Change-effect. You can also check the differences with the previous lesson in the git diff.